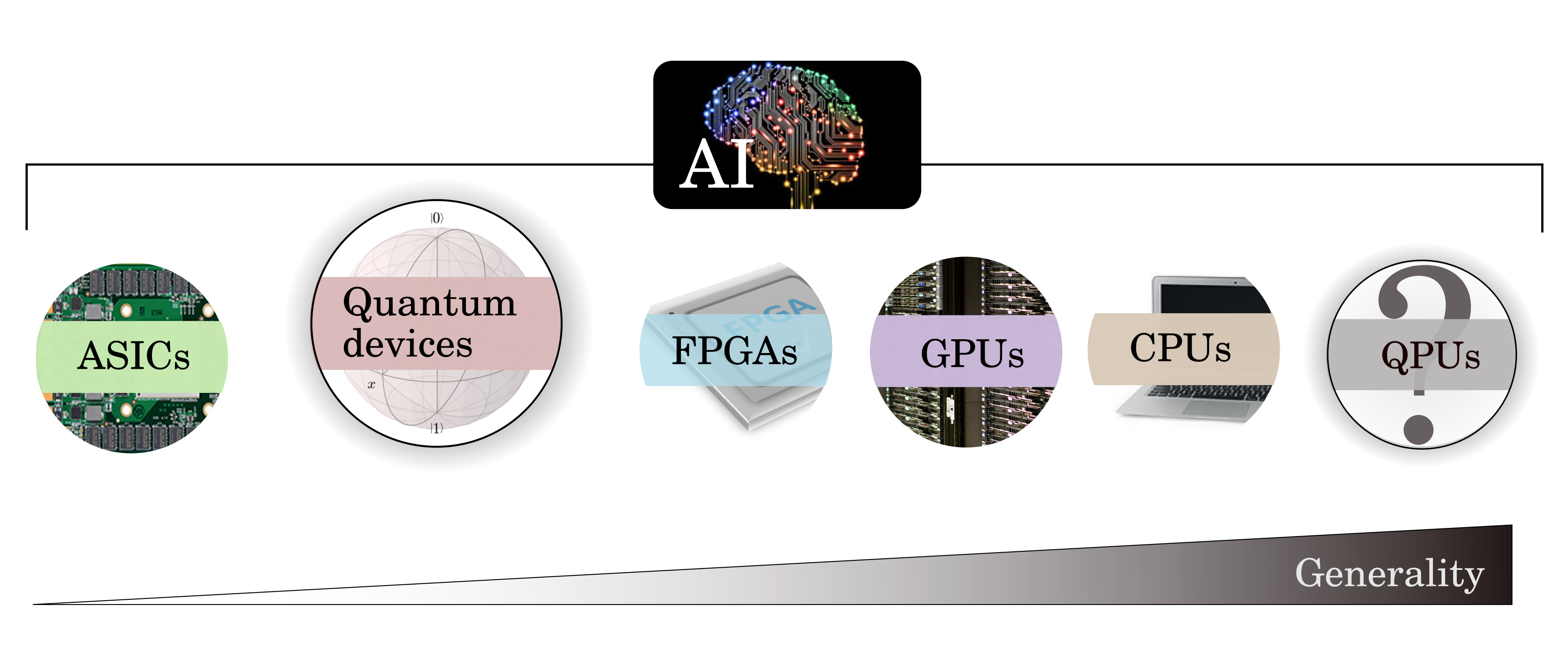

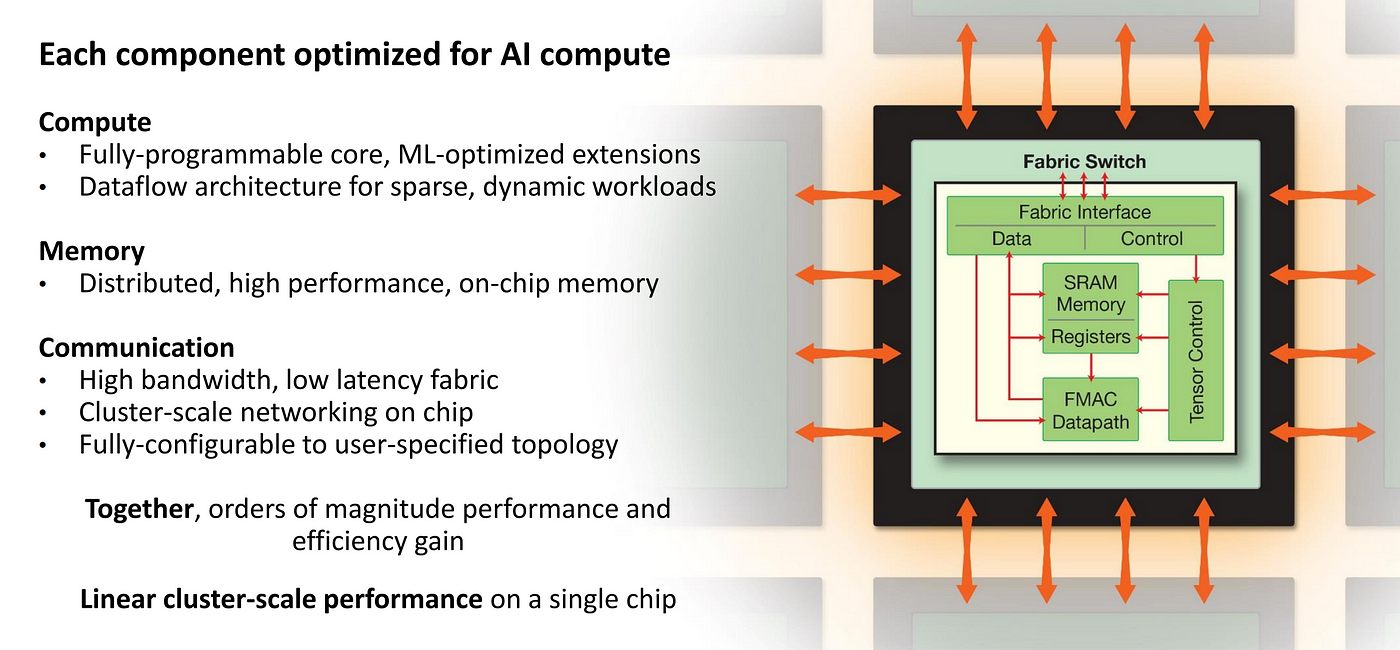

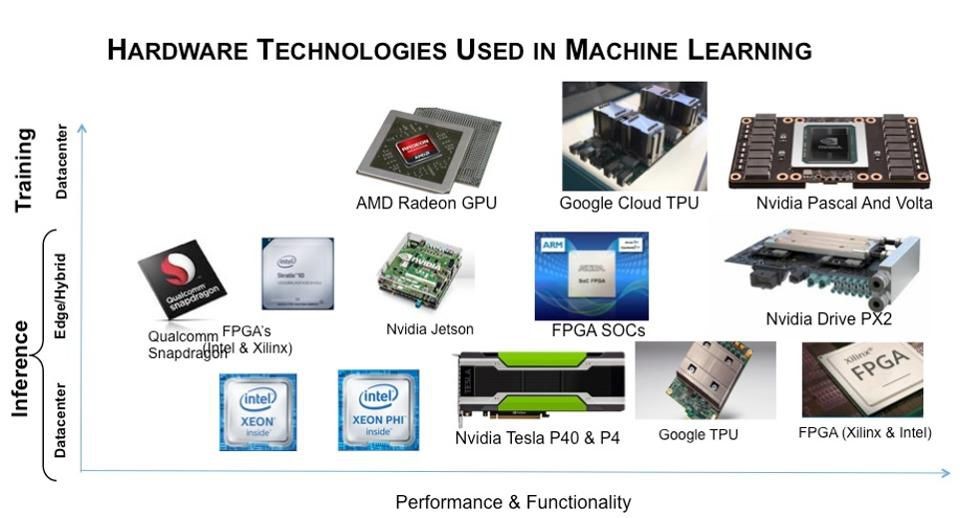

The Deep Learning Inference Acceleration Blog Series — Part 2- Hardware | by Amnon Geifman | Towards Data Science

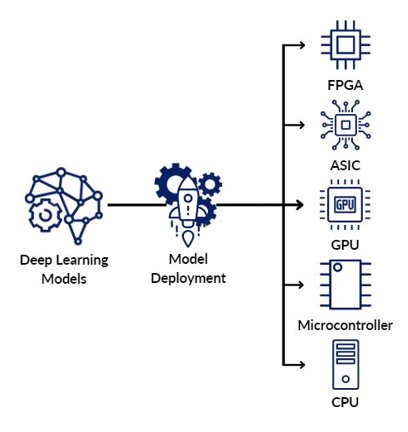

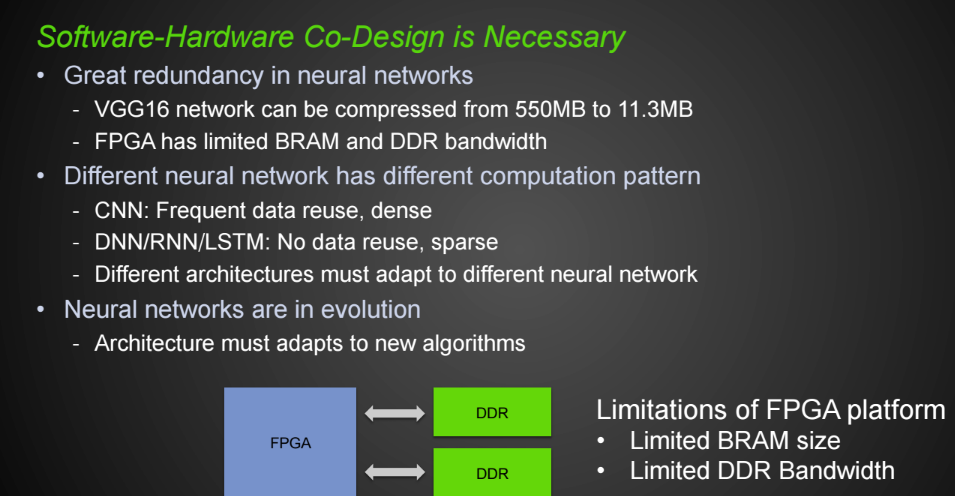

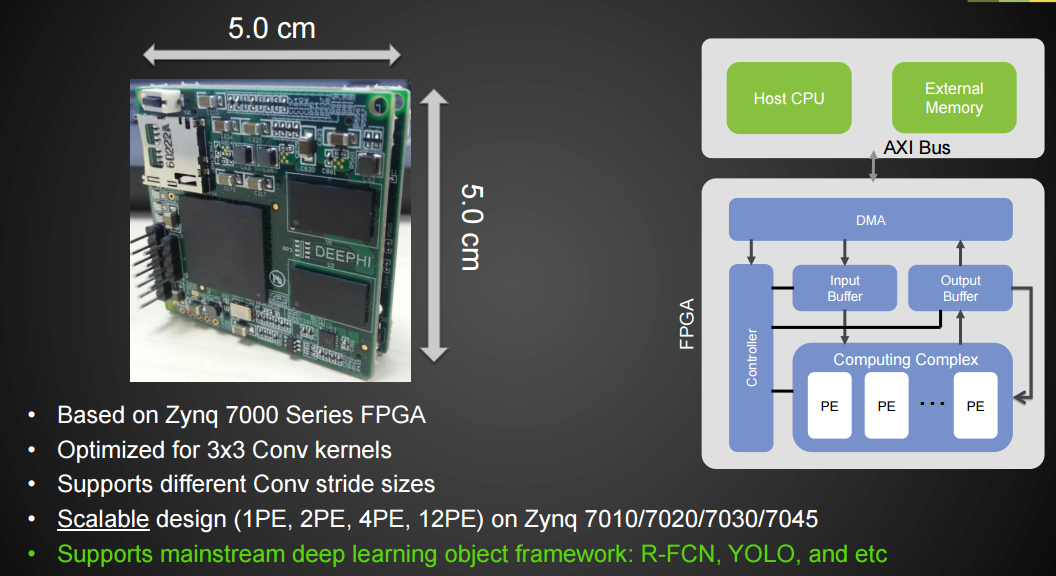

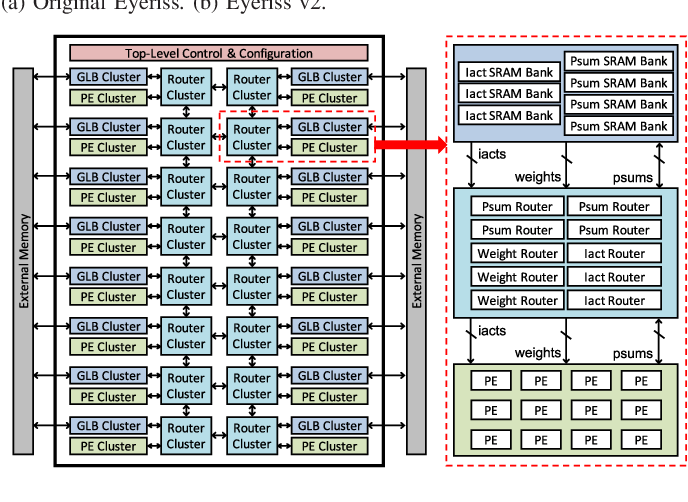

Blog: Aldec Blog - How to develop high-performance deep neural network object detection/recognition applications for FPGA-based edge devices - FirstEDA

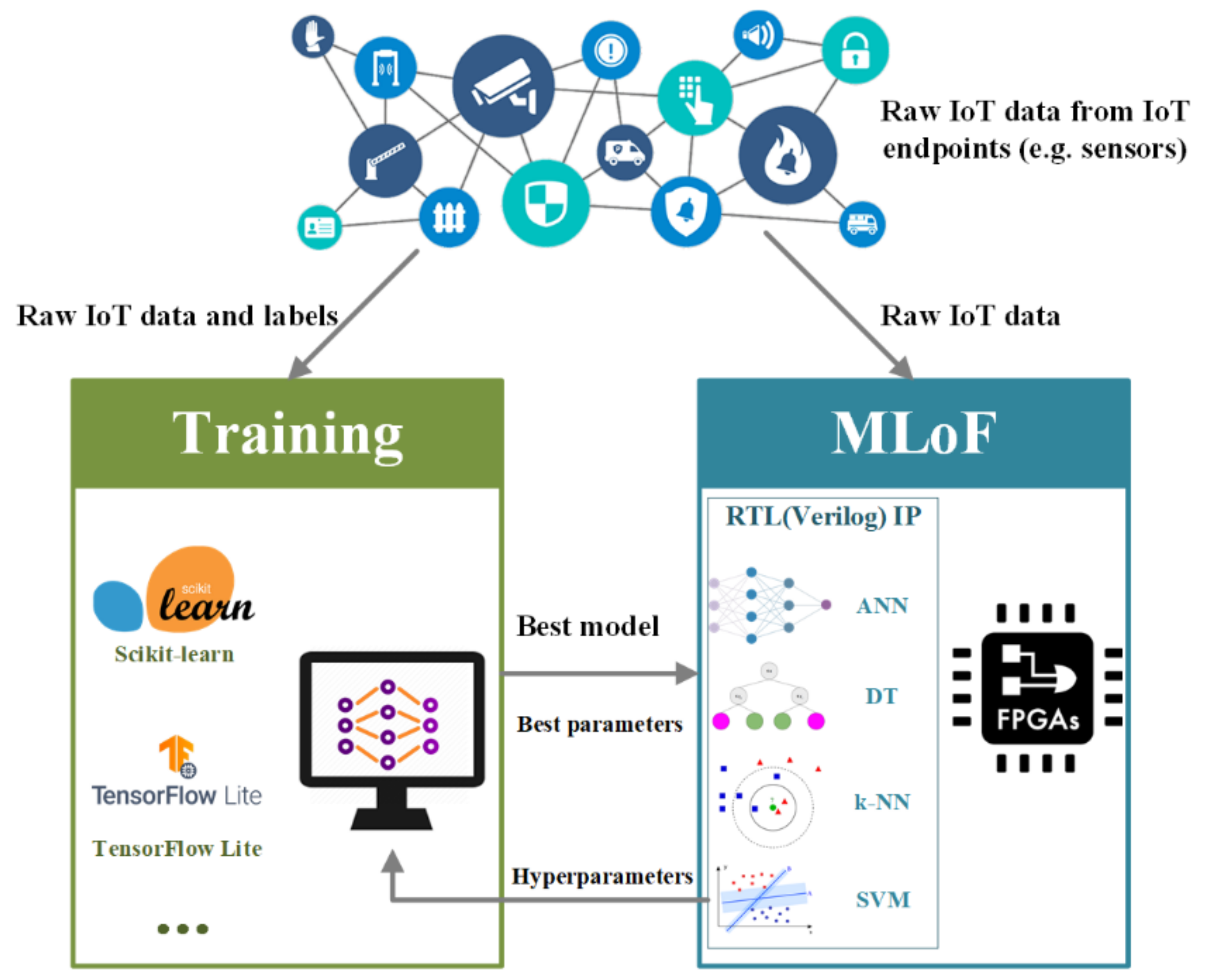

Applied Sciences | Free Full-Text | MLoF: Machine Learning Accelerators for the Low-Cost FPGA Platforms
